Hi,

I’m making a controller script, but the controllers don’t fit every mesh because the meshes can be different sizes. Is there a way to adjust the controllers’ scale automatically based on the size of the mesh?

//Dabbo

By controllers, do you mean controls for a rig?

Either way, what you could do is get the bounding box of the mesh and scale the controllers based on its size. `cmds.polyEvaluate` and `cmds.xform` can both return the min/max bounds you need to calculate it.
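A minimal sketch of turning those bounds into a scale, assuming the control curve was built with a radius of 1 unit and that the bounds arrive as the flat `[xmin, ymin, zmin, xmax, ymax, zmax]` list that `cmds.xform(..., query=True, boundingBox=True)` returns. The helper name, the `padding` factor, and the node names in the comments are hypothetical; the math is plain Python so it runs outside Maya.

```python
def control_scale_from_bbox(bbox, padding=1.2):
    """Uniform scale for a unit-radius control that reaches the bbox edges.

    bbox: [xmin, ymin, zmin, xmax, ymax, zmax], the flat order that
          cmds.xform(..., query=True, boundingBox=True) returns.
    padding: hypothetical margin so the control clears the surface.
    """
    xmin, ymin, zmin, xmax, ymax, zmax = bbox
    extents = (xmax - xmin, ymax - ymin, zmax - zmin)
    # Half the largest extent is the radius a unit-radius control must reach.
    return max(extents) / 2.0 * padding


# Inside Maya the bounds could come from something like (not executed here;
# "body_geo" and "elbow_ctrl" are hypothetical node names):
#   bbox = cmds.xform("body_geo", query=True, boundingBox=True, worldSpace=True)
#   s = control_scale_from_bbox(bbox)
#   cmds.scale(s, s, s, "elbow_ctrl")
print(control_scale_from_bbox([-1.0, 0.0, -0.5, 1.0, 4.0, 0.5]))
```

Using the largest extent keeps the control outside the mesh in every direction, at the cost of oversizing controls on long, thin meshes.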

Yeah, I mean controls for a rig. I will look into bounding boxes, thanks.

You can do a ray cast with the Maya API, using the location of your control as the source together with a direction. The distance between the source and the hit point on the mesh can then be used to scale the control. I would advise calculating the distance from multiple directions to make sure your controls get the best possible size.
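A plain-Python sketch of the multi-direction ray idea. Inside Maya the hit test would normally come from the API (for example `MFnMesh.closestIntersection`); here a Möller-Trumbore ray/triangle test stands in so the example runs anywhere. The function names and `padding` are hypothetical, the mesh is assumed to be a list of world-space triangles, and the directions must be unit vectors so that the ray parameter equals the hit distance.

```python
def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def ray_triangle(origin, direction, tri, eps=1e-9):
    """Möller-Trumbore test: distance along a unit direction to tri, or None."""
    v0, v1, v2 = tri
    e1 = _sub(v1, v0)
    e2 = _sub(v2, v0)
    p = _cross(direction, e2)
    det = _dot(e1, p)
    if abs(det) < eps:  # ray is parallel to the triangle's plane
        return None
    inv = 1.0 / det
    s = _sub(origin, v0)
    u = _dot(s, p) * inv
    if u < 0.0 or u > 1.0:
        return None
    q = _cross(s, e1)
    v = _dot(direction, q) * inv
    if v < 0.0 or u + v > 1.0:
        return None
    t = _dot(e2, q) * inv
    return t if t > eps else None  # ignore hits behind the control


def hit_distances(origin, directions, triangles):
    """Nearest hit distance for each direction (None when the ray misses)."""
    result = []
    for d in directions:
        hits = [t for t in (ray_triangle(origin, d, tri) for tri in triangles)
                if t is not None]
        result.append(min(hits) if hits else None)
    return result


def control_scale_from_rays(origin, directions, triangles, padding=1.2):
    """Largest nearest-hit distance, padded so the control clears the mesh."""
    hits = [h for h in hit_distances(origin, directions, triangles) if h is not None]
    if not hits:
        raise ValueError("no ray hit the mesh")
    return max(hits) * padding
```

Taking the maximum over several directions is what keeps the control clear of the surface on all sides; a single ray only tells you about one side.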

I’m not sure I understand where the controller should be in this scenario. If the mesh is at the origin and I create a controller, the controller will also be at the origin. Wouldn’t the distance be 0?

You might also need to inspect the skin weights to get the points influenced by a particular bone. The mesh bounding box will give you the entire character, which is probably not what you want. Or do I misunderstand?
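Following the skin-weight idea, a sketch that limits the bounding box to the vertices a joint actually drives. The `weights` dict is a hypothetical input: in Maya one way to fill it is to query each vertex with something like `cmds.skinPercent(skin_cluster, vtx, query=True, transform=joint)`, but treat that as an assumption to check against your Maya version. The 0.5 threshold is an arbitrary choice.

```python
def weighted_bbox(positions, weights, threshold=0.5):
    """Bounding box of vertices whose weight for one joint is at least threshold.

    positions: list of (x, y, z) world-space vertex positions.
    weights: hypothetical {vertex_index: weight for this joint} dict.
    Returns [xmin, ymin, zmin, xmax, ymax, zmax], or None if no vertex qualifies.
    """
    picked = [positions[i] for i, w in weights.items() if w >= threshold]
    if not picked:
        return None
    xs, ys, zs = zip(*picked)
    return [min(xs), min(ys), min(zs), max(xs), max(ys), max(zs)]
```

The resulting box can drive the control size in place of the whole-mesh bounds, so an elbow control is sized from the elbow region rather than from the entire character.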

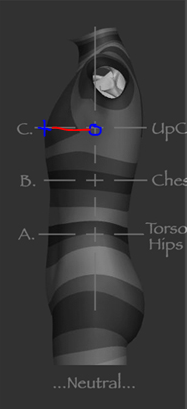

This image explains the projection example better.

The blue circle is where you positioned the control. You then do a raycast to get a hit point on the mesh (blue cross), and the distance between those two points (red line) can be used to scale the control so that it clears the geometry.

Original image: http://www.hippydrome.com

You might have trouble deciding where to send the rays in that scenario. You’d probably have to figure out the intended axis of the control, then shoot rays out on the plane normal to that axis, collecting the longest ones.
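One way to shoot rays on the plane normal to the control’s axis is to build two perpendicular unit vectors in that plane and rotate between them. This sketch generates those directions and keeps the longest hits; `plane_directions`, `longest_rays`, `count`, and `keep` are hypothetical names, and the distances are assumed to come from whatever ray test you use (one per direction, `None` for a miss).

```python
import math


def _normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    if length == 0.0:
        raise ValueError("zero-length vector")
    return tuple(c / length for c in v)


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def plane_directions(axis, count=8):
    """Unit directions evenly spaced on the plane normal to `axis`."""
    n = _normalize(axis)
    # Pick a helper vector that is not nearly parallel to the axis.
    helper = (1.0, 0.0, 0.0) if abs(n[0]) < 0.9 else (0.0, 1.0, 0.0)
    u = _normalize(_cross(n, helper))
    v = _cross(n, u)  # unit length because n and u are orthonormal
    dirs = []
    for i in range(count):
        a = 2.0 * math.pi * i / count
        c, s = math.cos(a), math.sin(a)
        dirs.append(tuple(c * u[k] + s * v[k] for k in range(3)))
    return dirs


def longest_rays(directions, distances, keep=2):
    """Pair each direction with its hit distance and return the `keep` longest."""
    pairs = [(d, t) for d, t in zip(directions, distances) if t is not None]
    pairs.sort(key=lambda p: p[1], reverse=True)
    return pairs[:keep]
```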

If you knew from other sources what a good secondary axis was, you could collect the longest rays on that plane both along that axis and perpendicular to it. That would give you two degrees of scale for the control, which might be better.
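A sketch of the two-degree version, assuming you have the control’s primary axis, a secondary-axis hint (for example the elbow’s bend direction, which is a hypothetical input here), and the hit vectors (hit point minus control position) from rays cast on the plane. The hint is first made perpendicular to the primary axis, then each hit is projected onto the hint and onto the remaining in-plane axis.

```python
import math


def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _normalize(v):
    length = math.sqrt(_dot(v, v))
    if length == 0.0:
        raise ValueError("zero-length vector")
    return tuple(c / length for c in v)


def two_axis_scale(axis, secondary, hit_vectors, padding=1.2):
    """Return (scale along the secondary axis, scale along the other in-plane axis).

    axis: the control's primary (normal) axis.
    secondary: hypothetical hint for the secondary axis; it must not be
        parallel to `axis`.
    hit_vectors: hit point minus control position for each in-plane ray.
    """
    n = _normalize(axis)
    # Gram-Schmidt: remove the component of the hint that lies along the axis.
    d = _dot(secondary, n)
    s = _normalize(tuple(secondary[i] - d * n[i] for i in range(3)))
    t = _cross(n, s)  # completes the in-plane basis
    along_s = max(abs(_dot(h, s)) for h in hit_vectors)
    along_t = max(abs(_dot(h, t)) for h in hit_vectors)
    return along_s * padding, along_t * padding
```

The two values would then be applied to whichever local axes of the control shape line up with `s` and `t`, which depends on how the curve was built.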

I thought maybe I could shoot rays along all axes and then compare the results. Let’s say I am making a controller for the elbow of a T-pose character facing the positive Z axis. The ray along X could then hit a finger or something, while the rays along Z and Y would hit the mesh around the elbow. This results in a big difference between the X distance and the Z and Y distances, so I would scale based on the Z and Y distances.
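The comparison described above can be sketched as a simple outlier filter: keep only hits within some ratio of the shortest one, then size the control from what remains. The ratio-to-shortest rule, the `ratio` and `padding` values, and the function names are hypothetical choices for illustration, not a standard technique.

```python
def filter_outlier_rays(distances, ratio=2.0):
    """Keep rays whose hit distance is at most `ratio` times the shortest hit.

    distances: {label: distance or None}, e.g. one entry per world axis.
    In the elbow example the rays running down the arm (+/-X in a T-pose)
    travel much farther than the rays crossing it (Y and Z), so they drop out.
    """
    hits = {k: d for k, d in distances.items() if d is not None}
    if not hits:
        return {}
    shortest = min(hits.values())
    return {k: d for k, d in hits.items() if d <= shortest * ratio}


def control_radius(distances, ratio=2.0, padding=1.2):
    """Padded radius from the rays that survive the outlier filter."""
    kept = filter_outlier_rays(distances, ratio)
    if not kept:
        raise ValueError("no ray hit the mesh")
    return max(kept.values()) * padding
```

Comparing against the shortest hit rather than the median is deliberate in this sketch: with only six rays, the long rays along the limb can be a large share of the samples and would pull a median upward.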

Yes, you’ll need some way of figuring out which direction is important; otherwise you’ll get pretty confusing results.

It may be easier to combine this with the method of collecting the vertices that are weighted to the bone you want to control. The shorter of the two results will be less prone to issues.
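A sketch of combining the two estimates and keeping the shorter one. It assumes you already have a ray-based radius, plus world positions and per-vertex weights for the joint (all hypothetical inputs). Here the weighted vertices are measured by their perpendicular distance from the bone axis, an assumption that suits a ring-shaped control around a limb better than a box does.

```python
import math


def weighted_radius(joint_pos, axis, positions, weights, threshold=0.5):
    """Largest distance from the bone axis among vertices weighted to the joint.

    joint_pos: world position of the joint; axis: bone direction.
    positions / weights: hypothetical world-space vertex positions and a
        {vertex_index: weight} dict for this joint.
    Returns None if no vertex reaches the threshold.
    """
    length = math.sqrt(sum(c * c for c in axis))
    n = tuple(c / length for c in axis)
    best = None
    for i, w in weights.items():
        if w < threshold:
            continue
        rel = tuple(positions[i][k] - joint_pos[k] for k in range(3))
        along = sum(rel[k] * n[k] for k in range(3))
        # Remove the component along the bone; what is left is the radial offset.
        perp = tuple(rel[k] - along * n[k] for k in range(3))
        r = math.sqrt(sum(c * c for c in perp))
        best = r if best is None else max(best, r)
    return best


def combined_radius(ray_radius, weight_radius, padding=1.2):
    """Use the shorter of the two estimates, falling back to whichever exists."""
    candidates = [r for r in (ray_radius, weight_radius) if r is not None]
    if not candidates:
        raise ValueError("no estimate available")
    return min(candidates) * padding
```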

Alright! I think I know what to do now. Thanks for the help!

//Dabbo