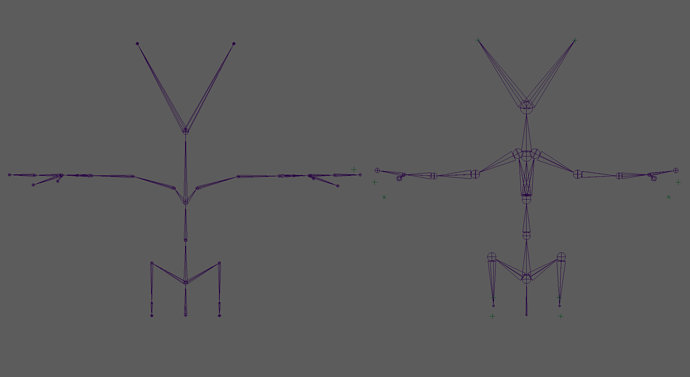

You want the offset between nodeA and nodeB. Traditionally these are the childNode and the parentNode.

This is referred to as parent space in most environments (some use the term local space, but that can get confusing, as local space is its own thing).

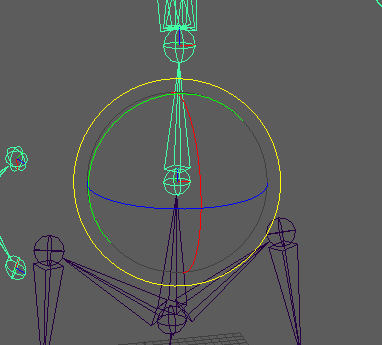

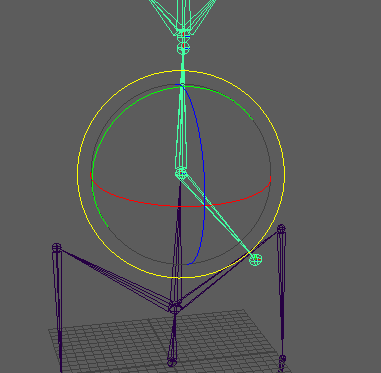

To get parent space, you take the transforms (which are always matrices) in world space and multiply them together in a specific order. The order depends on whether your matrices are column major or row major (an extra complication, but good to know there is a difference).
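To illustrate why the order matters, here is a minimal sketch in plain Python. It assumes that "row major" means the row-vector convention (translation in the bottom row, points multiplied on the left), which is my reading of the usual usage rather than something tied to a particular package. The helper names (`matmul`, `transpose`, `transform_point_row`) and the example matrices are made up for the illustration.

```python
def matmul(a, b):
    """Multiply two square matrices stored as nested lists."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def transpose(m):
    """Swap rows and columns."""
    return [list(row) for row in zip(*m)]

def transform_point_row(point, m):
    """Row-vector convention: the point sits on the left of the matrix."""
    return [sum(point[k] * m[k][j] for k in range(4)) for j in range(4)]

# Row-vector convention: 90 degree rotation about Z, and a move of 5 along X.
rot_row = [[0, 1, 0, 0], [-1, 0, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
trans_row = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [5, 0, 0, 1]]

# "Rotate, then move" reads left to right with row vectors.
rotate_then_move_row = matmul(rot_row, trans_row)

# The same transform in the column-vector convention uses the transposed
# matrices, and the multiplication order is reversed.
rotate_then_move_col = matmul(transpose(trans_row), transpose(rot_row))
same_transform = transpose(rotate_then_move_row) == rotate_then_move_col

# Swapping the order without switching convention gives a different transform.
move_then_rotate_row = matmul(trans_row, rot_row)

moved_point = transform_point_row([1, 0, 0, 1], rotate_then_move_row)
```

The formulas in this post are written in the row-vector order. If your environment uses column vectors, the same steps apply with the operands swapped.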

So, as I mentioned in my previous post, to get the offset of nodeA from nodeB you multiply the transform of nodeA by the inverse of the transform of nodeB.

This gets you the transform of nodeA in parent space.

To get the parent space transform back into world space, you then multiply it by the world space transform of the parent node.

parent_space_matrix = child_node.transform * parent_node.transform.inverse()

world_space_matrix = parent_space_matrix * parent_node.transform

# Thus, world_space_matrix should be equal to child_node.transform, which is in world space:

world_space_matrix == child_node.transform
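As a rough, runnable version of the round trip above, here is a sketch in plain Python with no scene API. It assumes row-vector 4x4 matrices (translation in the bottom row) and rigid transforms (rotation plus translation only), so the inverse can be built by hand. `make_transform`, `rigid_inverse`, and `matrices_close` are hypothetical helpers, and the node values are invented.

```python
import math

def matmul(a, b):
    """Multiply two 4x4 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def make_transform(angle_z_degrees, translation):
    """Row-vector matrix: rotate about Z, then translate."""
    c = math.cos(math.radians(angle_z_degrees))
    s = math.sin(math.radians(angle_z_degrees))
    tx, ty, tz = translation
    return [[c, s, 0.0, 0.0], [-s, c, 0.0, 0.0],
            [0.0, 0.0, 1.0, 0.0], [tx, ty, tz, 1.0]]

def rigid_inverse(m):
    """Invert a rigid row-vector matrix (no scale or shear assumed)."""
    rot_t = [[m[j][i] for j in range(3)] for i in range(3)]  # transposed rotation
    t = m[3][:3]
    inv_t = [-sum(t[k] * rot_t[k][j] for k in range(3)) for j in range(3)]
    return [rot_t[0] + [0.0], rot_t[1] + [0.0], rot_t[2] + [0.0], inv_t + [1.0]]

def matrices_close(a, b, tol=1e-9):
    """Compare with a tolerance, since float matrices rarely match exactly."""
    return all(math.isclose(x, y, abs_tol=tol)
               for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b))

# Both transforms are in world space.
parent_world = make_transform(30.0, (2.0, 0.0, 1.0))
child_world = make_transform(75.0, (3.0, 4.0, 1.0))

# Child expressed in parent space, then taken back to world space.
parent_space = matmul(child_world, rigid_inverse(parent_world))
round_trip = matmul(parent_space, parent_world)

round_trip_matches = matrices_close(round_trip, child_world)
```

In practice the `==` check is better done with a tolerance like this, because the inverse and the multiplications introduce small floating point errors.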

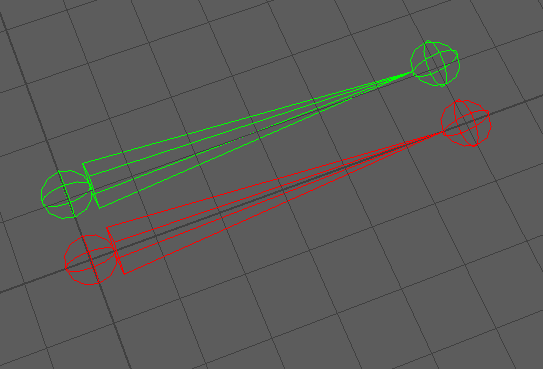

Those are the basic building blocks. You can swap the parent transform for the transform of any other node to get the offset from that node instead. Using this you can retarget between any objects.
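Here is a small sketch of that idea: take the offset of one node from an unrelated node, move the second node, and reapply the offset so the first one follows. This is only the building block, not the full retargeting algorithm. It again assumes row-vector, rigid 4x4 matrices, and `node_a`, `node_b`, and the helpers are illustrative names.

```python
def matmul(a, b):
    """Multiply two 4x4 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def rigid_inverse(m):
    """Invert a rigid row-vector matrix (rotation + translation only)."""
    rot_t = [[m[j][i] for j in range(3)] for i in range(3)]  # transposed rotation
    t = m[3][:3]
    inv_t = [-sum(t[k] * rot_t[k][j] for k in range(3)) for j in range(3)]
    return [rot_t[0] + [0], rot_t[1] + [0], rot_t[2] + [0], inv_t + [1]]

# World space transforms. node_b is not the hierarchy parent of node_a here.
node_a = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [12, 0, 0, 1]]
node_b = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [10, 0, 0, 1]]

# Offset of node_a from node_b: node_a is 2 units along node_b's X axis.
offset = matmul(node_a, rigid_inverse(node_b))

# node_b moves to (0, 5, 0) and turns 90 degrees about Z.
node_b_moved = [[0, 1, 0, 0], [-1, 0, 0, 0], [0, 0, 1, 0], [0, 5, 0, 1]]

# Reapplying the offset puts node_a 2 units along node_b's new X axis,
# which now points along world Y.
node_a_following = matmul(offset, node_b_moved)
node_a_position = node_a_following[3][:3]
```

Storing the offset once and reapplying it against a different or animated node is the same pattern a constraint or retarget step relies on.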

I am trying to work out again the algorithm I used all those years ago, but I don’t have a lot of spare time at the moment.

I think the bit that I had incorrect in my initial suggestion was:

Get ref pose of bones in target rig

This needs to be the reference pose of the bones in the target rig using the parent node of the corresponding bone in the source rig, with the translation set to zero.

That way you have the correct offset to apply to the target bone based on how the source has moved around its parent.

I am not great at explaining, but I will carry on writing up the example I am working on when I can and pass you the results (unless you figure it out before I get there).
